Abdul Rahman Kreidieh, Glen Berseth, Brandon Trabucco, Samyak Parajuli, Sergey Levine, and Alexandre M. Bayen

UC Berkeley

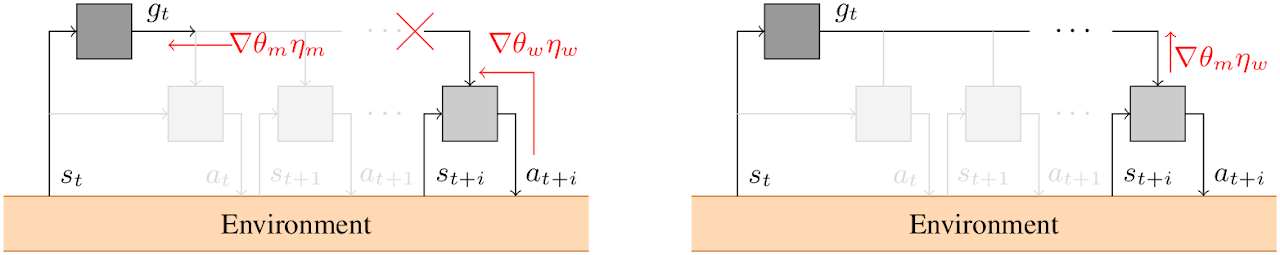

Motivated by studies on differentiable communication and emergent cooperation phenomena in MARL, we propose a novel optimization procedure to address limitations associated with inter-level cooperation in HRL. Our approach encourages cooperation between the levels of a hierarchy by redefining the objective of higher-level policies to directly account for the losses experienced by lower-level policies. This allows the higher-level policy to distinguish goals with small expected returns from goals that the lower-level policy could not achieve. The gradients associated with these additions to the higher-level loss are then propagated through the higher-level policy's parameters. To do so, during training we replace the communication actions (or goals) emitted by the higher-level policy with direct connections between its output and the input to the lower-level policy (see the right figure below).
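To make the idea of a "direct connection" concrete, here is a minimal one-dimensional sketch. It is not the authors' implementation: the linear policies, the squared-error losses, the weight `lam`, and every function name are assumptions chosen for illustration. The point it illustrates is that the goal is kept as a differentiable function of the higher-level parameter, so the lower-level loss contributes a gradient to the higher-level update.

```python
# Toy 1-D sketch. All functional forms, names, and the weight `lam` are
# illustrative assumptions, not the method's actual losses or policies.

def manager_goal(theta_m, state):
    """Higher-level policy: proposes a goal as a linear function of the state."""
    return theta_m * state


def worker_action(theta_w, state, goal):
    """Lower-level policy: acts on the offset between the goal and the state."""
    return theta_w * (goal - state)


def worker_loss(theta_w, state, goal):
    """Lower-level loss: squared distance to the goal after one action."""
    next_state = state + worker_action(theta_w, state, goal)
    return (next_state - goal) ** 2


def task_loss(goal, target):
    """Stand-in for the higher-level task loss (a negative return)."""
    return (goal - target) ** 2


def cooperative_manager_loss(theta_m, theta_w, state, target, lam):
    """Higher-level loss that also accounts for the lower-level loss."""
    # The goal is NOT treated as a fixed sampled action: it stays a
    # function of theta_m, which is the "direct connection".
    goal = manager_goal(theta_m, state)
    return task_loss(goal, target) + lam * worker_loss(theta_w, state, goal)


def cooperative_manager_grad(theta_m, theta_w, state, target, lam):
    """Analytic d(loss)/d(theta_m), using the chain rule through the goal."""
    goal = manager_goal(theta_m, state)
    dgoal_dtheta = state
    dtask_dgoal = 2.0 * (goal - target)
    # next_state - goal simplifies to (theta_w - 1) * (goal - state), so
    # worker_loss = (theta_w - 1)^2 * (goal - state)^2.
    dworker_dgoal = 2.0 * (theta_w - 1.0) ** 2 * (goal - state)
    return (dtask_dgoal + lam * dworker_dgoal) * dgoal_dtheta
```

With `lam = 0` the gradient reduces to the task-only gradient. Any `lam > 0` adds a term that steers the higher-level policy away from goals the lower-level policy fails to reach in this toy setting.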

#Results

We demonstrate improved performance over current HRL methods across a number of difficult long-term planning tasks.

##AntMaze

We present the performance of the CHER algorithm on a suite of continuous control tasks. The first of these, AntMaze, can be seen in the videos below. In this task, the agent must reach an arbitrary position in the maze; the videos show it reaching each of the three corners. In this problem, both the standard HRL and CHER algorithms are capable of attaining similar, approximately optimal solutions. The goals in both cases (represented by the blue ant) are also very distant from the agent and do not need to change frequently; they simply provide the ant with a direction of movement. The simplicity of the required higher-level behavior likely explains why inter-level cooperation is not required here.

| Normal HRL | CHER |

##AntFourRooms

In the AntFourRooms environment, we begin to see the performance improvements that can arise from promoting inter-level cooperation between agents. In this environment, the agent is tasked with reaching one of the three corners of the environment. While both standard HRL and CHER are capable of navigating to the adjacent rooms, the standard approach does not succeed in navigating to the diagonal room via any of the adjacent lanes. Instead, it attempts to move along the shortest path, thereby colliding with the walls ahead.

| Normal HRL | CHER |

This post is based on the following paper:

- Abdul Rahman Kreidieh, Glen Berseth, Brandon Trabucco, Samyak Parajuli, Sergey Levine, and Alexandre M. Bayen.